AWS ECS Architecture Diagrams

If you are running containerised workloads on AWS ECS, you will appreciate the benefits of visualising your clusters, services and tasks using this...

In this post we will take a high-level look at the different container services available on AWS. If you want to run a containerized application on AWS, you have multiple options to choose from, depending on your application requirements, security needs and orchestration software preferences.

We will take a look at ECS (Elastic Container Service) and what it's used for, then we'll compare it to EKS (Elastic Kubernetes Service).

We will also look at different ways of running containers: on manually provisioned EC2 instances with a container runtime such as Docker, or by letting AWS handle the underlying hosting infrastructure with AWS Fargate. Finally, we will discuss ECR (Elastic Container Registry).

Running Applications

Consider a microservice application scenario. If you're developing microservices, you would typically package each microservice in its own container. Containers can scale quickly, so you may want to create replicas, and your application may also rely on third-party services such as messaging systems and authentication services. You end up with a number of containerised workloads that you need to deploy on some hosting infrastructure.

In this post we're talking about the container hosting options available on AWS.

Let's say you have created 10 containers, one for each of your 10 microservices, plus five additional containers that your microservices use (running other applications or services).

If you deploy all of those manually on AWS EC2 instances that have Docker or another container runtime installed, you suddenly have a lot of moving parts to manage. Typically you would use a container orchestration service to manage your containers.

Orchestration

Once you have deployed your container images, how do you manage them? You now have a collection of virtual servers (EC2 instances) running all your Docker containers. How do you know how much resource capacity remains on these machines? How do you know where to schedule the next container, or which containers have stopped running or run out of resources and died?

Do you manually restart each container? If you have multiple replicas of an application container that you no longer need, how do you get rid of them?

These are all things you need to handle when you are running dozens or even hundreds of containers on your AWS infrastructure.
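To make the bookkeeping concrete, here is a minimal first-fit placement sketch. The `Instance` and `Container` classes and the `schedule` function are hypothetical illustrations, not part of any AWS API: each host has a fixed CPU budget (in CPU units, 1024 = 1 vCPU) and memory budget (MiB), and a new container goes on the first host with enough of both left.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Container:
    """A container's resource request (hypothetical model)."""
    name: str
    cpu: int     # CPU units (1024 = 1 vCPU)
    memory: int  # MiB


@dataclass
class Instance:
    """An EC2 host with a fixed CPU and memory budget (hypothetical model)."""
    name: str
    cpu: int
    memory: int
    placed: list[Container] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu - sum(c.cpu for c in self.placed)

    def free_memory(self) -> int:
        return self.memory - sum(c.memory for c in self.placed)


def schedule(container: Container, instances: list[Instance]) -> str | None:
    """First-fit placement: put the container on the first host with enough
    free CPU and memory and return that host's name (None if nothing fits)."""
    for host in instances:
        if host.free_cpu() >= container.cpu and host.free_memory() >= container.memory:
            host.placed.append(container)
            return host.name
    return None


if __name__ == "__main__":
    hosts = [Instance("host-a", 2048, 4096), Instance("host-b", 2048, 4096)]
    for c in [Container("orders", 1024, 2048), Container("payments", 1536, 1024),
              Container("auth", 1024, 3072)]:
        print(c.name, "->", schedule(c, hosts))
```

Doing this by hand for every container is exactly the work an orchestrator takes over; ECS, for example, provides its own placement strategies such as binpack and spread.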

The solution is to use a container orchestration tool to automate the process. Popular choices include Kubernetes and AWS's own ECS.

ECS is one of the container services that AWS offers. It is essentially a container orchestration service that manages the whole life cycle of a container: ECS starts and stops containers, reschedules or restarts them when they fail, and integrates with load balancing to distribute traffic across them. ECS automates these processes.
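At the heart of this kind of orchestration is a reconcile step: compare how many copies of a container you want with how many are actually running, then start or stop tasks to close the gap. The sketch below is a simplified, hypothetical model of that idea (the `reconcile` function and its task-status dictionary are illustrative), not the ECS scheduler's real implementation.

```python
from __future__ import annotations


def reconcile(desired_count: int, tasks: dict[str, str]) -> dict:
    """Work out what an orchestrator should do to reach `desired_count`
    running copies. `tasks` maps a task id to "RUNNING" or "STOPPED"."""
    running = sorted(t for t, status in tasks.items() if status == "RUNNING")
    gap = desired_count - len(running)
    return {
        # Start replacements for stopped/crashed tasks or newly requested replicas.
        "start": max(gap, 0),
        # Stop surplus replicas when fewer are wanted than are running.
        "stop": running[desired_count:] if gap < 0 else [],
    }


if __name__ == "__main__":
    print(reconcile(3, {"t1": "RUNNING", "t2": "STOPPED", "t3": "RUNNING"}))
    print(reconcile(1, {"t1": "RUNNING", "t2": "RUNNING", "t3": "RUNNING"}))
```

An orchestrator runs a check like this continuously, so a crashed container is replaced and surplus replicas are removed without anyone restarting or deleting them by hand.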

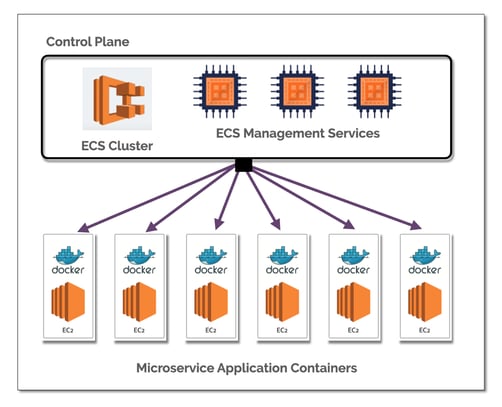

ECS Clusters

An ECS cluster is the logical grouping through which the ECS control plane manages and communicates with the individual containers running on the EC2 instances that belong to the cluster. The cluster manages the whole life cycle of a container, from scheduling and starting it through to removing it.

When you create an ECS cluster, you establish the control plane with its management services, but the containers themselves need to run on separate EC2 instances. You create EC2 instances to host the containers and connect them to the ECS cluster, so they are not isolated, manually managed servers. Each EC2 instance requires a container runtime such as Docker to execute the container payload.

The ECS container agent is also installed on each EC2 instance; this is how the cluster control plane (the ECS processes) communicates with each individual instance.
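With the EC2 launch type, a common way to join an instance to a cluster is through its user data: on an ECS-optimized AMI, the container agent reads the `ECS_CLUSTER` setting from `/etc/ecs/ecs.config` at startup. The helper below only builds that script; the function name `ecs_user_data` and the cluster name `orders-cluster` are hypothetical.

```python
import re

# ECS cluster names: up to 255 letters, numbers, underscores and hyphens.
_CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,255}")


def ecs_user_data(cluster_name: str) -> str:
    """Return a user-data script that points the ECS container agent on an
    ECS-optimized AMI at `cluster_name` via /etc/ecs/ecs.config."""
    if not _CLUSTER_NAME.fullmatch(cluster_name):
        raise ValueError(f"invalid ECS cluster name: {cluster_name!r}")
    return (
        "#!/bin/bash\n"
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"
    )


if __name__ == "__main__":
    print(ecs_user_data("orders-cluster"))
```

You could pass the resulting string as the `UserData` when launching the instance (for example with boto3's `ec2.run_instances`). The instance also needs an IAM instance role that allows the agent to register with ECS.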

The cluster helps you manage all your containers in one place and automates some of these complex container management processes.

However, this approach isn't fully managed and automated. You still have to configure and monitor the actual virtual machines (the EC2 instances). You still have to create the EC2 instances yourself and join them to the ECS cluster, and when you schedule a new container you have to make sure you have enough EC2 instances and resources to run it.

There is also the operating system of each virtual machine to consider, as well as the Docker runtime and the ECS agent to manage.

The ECS cluster approach simplifies the management of your container workloads, automating the starting, stopping, scaling and monitoring of your containers. However, it still requires you to manually provision the underlying infrastructure (EC2 instances etc.).

Much in the same way you delegate container management to ECS, it is possible to delegate the provisioning and management of the underlying AWS hosting infrastructure using:

AWS Fargate

AWS Fargate is an alternative to manually provisioned EC2 instances. Instead of creating and provisioning EC2 instances, installing a container runtime like Docker and the ECS agent, and then connecting them to your ECS cluster, you can use Fargate.

Fargate will take care of spinning up the virtual machines to run your containers.

Fargate is a serverless way to launch containers. You simply take the container you want to deploy, tell AWS "here's my container", and hand it over to Fargate.

From within the ECS control plane, Fargate takes your container and runs it on compute sized for the task. You declare how much CPU and memory the task needs in its task definition, choosing from the sizes Fargate supports, and Fargate automatically provisions a server with those resources for that specific task.
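In practice, the CPU and memory a Fargate task receives come from the `cpu` and `memory` values in its task definition, and these must be one of the combinations Fargate supports. The sketch below picks the smallest supported combination for your own estimate of what a container needs. The table reflects the AWS documentation at the time of writing for sizes up to 4 vCPU (larger sizes are omitted); treat it as an assumption and verify it against the current docs. The helper name is hypothetical.

```python
from __future__ import annotations

# Fargate task sizes: CPU units -> supported memory values in MiB.
# Assumption: based on AWS docs at the time of writing; 8 and 16 vCPU sizes omitted.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: [1024, 2048, 3072, 4096],
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def smallest_fargate_size(cpu_needed: int, memory_needed: int) -> tuple[int, int]:
    """Return the first (cpu, memory) pair, ordered by CPU then memory,
    that covers the requested CPU units and memory (MiB)."""
    for cpu in sorted(FARGATE_SIZES):
        if cpu < cpu_needed:
            continue
        for memory in FARGATE_SIZES[cpu]:
            if memory >= memory_needed:
                return cpu, memory
    raise ValueError("no size in this table fits the request")


if __name__ == "__main__":
    print(smallest_fargate_size(200, 700))
    print(smallest_fargate_size(1024, 9000))
```

Ordering by CPU first means a memory-hungry container moves up to a larger CPU tier only when the current tier cannot offer enough memory.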

The advantage is that you don't need to worry about spinning up new EC2 instances or having enough resources to schedule a new container, because you don't have to provision anything beforehand. You only ever have the infrastructure needed to run your containers, which means you pay only for the resources your tasks are configured to use while they run.

This is potentially a major advantage over manually provisioned hosting infrastructure, where you may be running and paying for EC2 instances that have no active containers.

With Fargate you pay only for the CPU and memory your tasks request, for as long as they run.
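A back-of-the-envelope comparison shows why this matters. Fargate bills Linux tasks per second for the vCPU and memory they request, with a one-minute minimum, while an EC2 instance is billed for as long as it runs, whether or not it hosts any containers. The hourly rates in the example are made-up placeholders, not real AWS prices.

```python
import math


def fargate_task_cost(vcpu: float, memory_gb: float, seconds: float,
                      vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Approximate cost of one Linux Fargate task: the vCPU and memory it
    requests, billed per second (rounded up) with a one-minute minimum."""
    billed_seconds = max(math.ceil(seconds), 60)
    return (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate) * billed_seconds / 3600


def ec2_instance_cost(instance_hour_rate: float, hours_running: float) -> float:
    """An EC2 instance is billed while it runs, whether or not it hosts containers."""
    return instance_hour_rate * hours_running


if __name__ == "__main__":
    # Placeholder rates, NOT real AWS prices.
    task = fargate_task_cost(0.25, 0.5, 2 * 3600, vcpu_hour_rate=0.04, gb_hour_rate=0.004)
    host = ec2_instance_cost(0.02, 24)
    print(f"Fargate task, 2 h/day: {task:.3f}; EC2 host, 24 h/day: {host:.3f}")
```

With these placeholder rates, a small task running two hours a day costs 0.024 per day against 0.48 for an always-on instance. The comparison is deliberately simplified: one well-utilised EC2 instance can host many tasks, which narrows the gap.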

Both the EC2 and Fargate launch types under ECS integrate fully with the wider AWS ecosystem, so you can take advantage of services like CloudWatch for monitoring, Elastic Load Balancing, IAM for permissions and users, and VPCs for network segmentation.
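To show where some of these integrations appear, the sketch below builds a minimal Fargate task definition as a plain Python dictionary. The field names follow the ECS `RegisterTaskDefinition` API: `awsvpc` networking places each task in your VPC, and the `awslogs` log driver sends container output to CloudWatch Logs. The helper name, the log group `/ecs/demo`, the region and the image are hypothetical placeholders.

```python
import json


def fargate_task_definition(family: str, image: str, cpu: str = "256", memory: str = "512",
                            log_group: str = "/ecs/demo", region: str = "us-east-1") -> dict:
    """Build a minimal ECS task definition for Fargate (placeholder defaults)."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # each task gets its own network interface in your VPC
        "cpu": cpu,               # task-level CPU units, passed as a string
        "memory": memory,         # task-level memory in MiB, passed as a string
        "containerDefinitions": [{
            "name": family,
            "image": image,
            "essential": True,
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
            "logConfiguration": {
                "logDriver": "awslogs",  # ship stdout/stderr to CloudWatch Logs
                "options": {
                    "awslogs-group": log_group,
                    "awslogs-region": region,
                    "awslogs-stream-prefix": family,
                },
            },
        }],
    }


if __name__ == "__main__":
    print(json.dumps(fargate_task_definition("orders", "nginx:latest"), indent=2))
```

The dictionary's keys match the keyword arguments of boto3's `ecs.register_task_definition`, so it could be passed as `**td`. A real Fargate task that pulls from ECR or uses `awslogs` also needs an `executionRoleArn`, which is left out here.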

Kubernetes

If you prefer to stick with a more familiar container orchestration tool, you will probably default to Kubernetes, the most popular container orchestration tool at the time of writing.

All is not lost: you can still use AWS infrastructure if you want to use Kubernetes. AWS provides a service called EKS, which stands for Elastic Kubernetes Service.

EKS lets you manage a Kubernetes cluster.

The major advantage of EKS over ECS is portability: ECS has many AWS-only dependencies, whereas a Kubernetes cluster is much simpler to migrate to another cloud provider or hosting platform.

If you do integrate AWS-specific services and tools into your container workloads, the migration becomes more difficult, because you would need to replace those components on the target platform.

Another reason to choose EKS over ECS is that you may already use Kubernetes, which is a much more popular orchestration tool than ECS. With EKS you also have access to all the tools and plugins in the Kubernetes ecosystem.

To deploy containers with EKS, you first create a cluster, which is similar to the ECS control plane.

In Kubernetes this is made up of the master nodes. When you create an EKS cluster, AWS provisions Kubernetes master nodes that already have all the Kubernetes master services pre-installed.

EKS automatically replicates these master nodes across multiple Availability Zones in the region where you create the cluster.

AWS also takes care of storing the cluster data, replicating it and backing it up, so the Kubernetes master nodes are handled entirely by EKS.

Once the cluster control plane is set up, you need worker nodes: the infrastructure that actually runs your containers. Just as with ECS, you create EC2 instances for the Kubernetes compute fleet and then connect them to EKS.

With the master nodes and the worker node group connected, you have a complete Kubernetes cluster.

Once the cluster is established, you can connect to it using the kubectl command and start deploying containers inside the cluster.
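As an illustration of what you would deploy, the sketch below builds a minimal Kubernetes `Deployment` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The `deployment_manifest` helper, the `orders` name and the `nginx:1.25` image are hypothetical placeholders.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment whose selector matches its pod labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("orders", "nginx:1.25"), indent=2))
```

Assuming the AWS CLI is configured, `aws eks update-kubeconfig --region <region> --name <cluster>` points kubectl at the EKS cluster. If you save the script as, say, `deployment.py`, then `python deployment.py > deployment.json` followed by `kubectl apply -f deployment.json` asks the cluster to run two replicas.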

You can organise your worker nodes into node groups, which handle some of the heavy lifting for you and make it easier to add and configure new worker nodes for your cluster.

If you want to delegate hosting infrastructure to AWS for an EKS cluster you can also use Fargate instead of EC2 instances so your underlying infrastructure is scaled up and down automatically.
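On EKS, which pods run on Fargate is decided by a Fargate profile: each profile has selectors made of a namespace and optional labels, and a pod that matches a selector is scheduled onto Fargate. The function below is a simplified, hypothetical model of that matching rule. It uses exact namespace and label matching only and leaves out other profile settings.

```python
def runs_on_fargate(pod_namespace: str, pod_labels: dict, selectors: list) -> bool:
    """Return True if the pod matches any selector of a (hypothetical) Fargate profile.
    A selector matches when its namespace equals the pod's namespace and every
    label in the selector is present on the pod with the same value."""
    for sel in selectors:
        if sel["namespace"] != pod_namespace:
            continue
        if all(pod_labels.get(key) == value for key, value in sel.get("labels", {}).items()):
            return True
    return False


if __name__ == "__main__":
    selectors = [{"namespace": "orders"},
                 {"namespace": "batch", "labels": {"compute": "fargate"}}]
    print(runs_on_fargate("batch", {"compute": "fargate", "app": "report"}, selectors))
```

Pods that match no selector are left for the EC2 worker nodes, which is how a single EKS cluster can mix both kinds of hosting infrastructure.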

AWS ECR

There is one more container service on AWS: the Elastic Container Registry (ECR).

ECR is a repository for container images, an alternative to Docker Hub or a Nexus Docker repository, where you can store your Docker images.

Being part of the AWS ecosystem, using ECR makes it easier to integrate with other AWS services.

You can automatically get notified when a new version of an image is pushed to the registry and use that notification to deploy the new image to your cluster.
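One way to wire this up, assuming you route ECR's push events through Amazon EventBridge to your own handler (for example a Lambda function), is to filter for successful pushes and hand the new tag to whatever triggers your deployment. The field names below follow the "ECR Image Action" events ECR sends to EventBridge; the `image_to_deploy` function is hypothetical, and the deployment trigger itself is left out.

```python
from __future__ import annotations


def image_to_deploy(event: dict) -> tuple[str, str] | None:
    """Return (repository, tag) for a successful, tagged ECR push event, else None."""
    if event.get("source") != "aws.ecr" or event.get("detail-type") != "ECR Image Action":
        return None
    detail = event.get("detail", {})
    if detail.get("action-type") != "PUSH" or detail.get("result") != "SUCCESS":
        return None
    repository, tag = detail.get("repository-name"), detail.get("image-tag")
    if not repository or not tag:
        return None  # e.g. a digest-only push with no tag
    return repository, tag
```

A handler would call this on each incoming event and, when it returns a result, start a rollout of that image tag to the cluster.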

ECR is fairly straightforward to use: you create private repositories for your different Docker or application images, push new versions of your container images to them, and your clusters pull those images when they deploy.
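Images in a private ECR repository are addressed by a URI of the form `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`, which is what you push to and what a task definition or Kubernetes manifest references. The helpers below build and parse that form for commercial AWS regions; the function names, account ID and repository name are hypothetical.

```python
import re

# Assumption: commercial-region ECR hostnames (amazonaws.com) with tagged images.
ECR_URI = re.compile(
    r"(?P<account>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<repository>[a-z0-9._/-]+):(?P<tag>[\w.-]+)"
)


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build the URI used to push and pull a tagged image in ECR."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def parse_ecr_image_uri(uri: str) -> dict:
    """Split an ECR image URI into account, region, repository and tag."""
    match = ECR_URI.fullmatch(uri)
    if match is None:
        raise ValueError(f"not an ECR image URI: {uri}")
    return match.groupdict()
```

To push, you would typically log Docker in with `aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <account>.dkr.ecr.<region>.amazonaws.com`, then run `docker push` with the URI built above.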

So to recap, you can use AWS ECS with manually provisioned EC2 instances to run your containers, or use Fargate within the ECS cluster to provision hosting infrastructure automatically, sized to the resources each task declares. Both options are native to the AWS ecosystem.

You can also use AWS EKS to establish a more portable Kubernetes cluster, choosing either to manually provision EC2 instances for the worker nodes or to delegate the provisioning of hosting infrastructure to Fargate.

Lastly, you can take advantage of AWS ECR to host your Docker images and manage their deployment to either ECS or EKS.

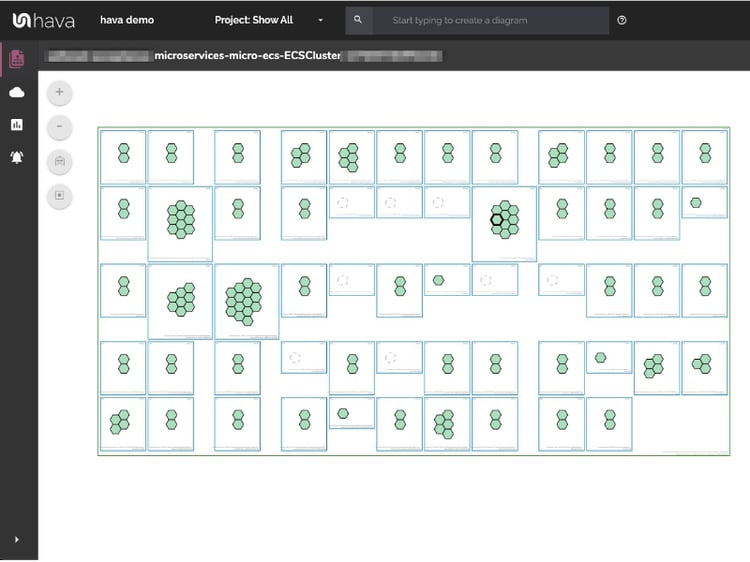

If you are building containerized applications on AWS, Hava provides a visualization of your container workloads via the "Container View".

The Container View shows your ECS clusters and a colour-coded status of each ECS task, along with information about the associated container instances.

You can check out the container view by taking Hava for a free test drive below.
