Amazon AWS S3 Fundamentals

Amazon Simple Storage Service S3 allows you to store objects on the same highly available network Amazon uses. In this post we explore the...

The AWS Cloud Practitioner Essentials module on storage and databases covers the key concepts of storage and the many options available on AWS, including Amazon Elastic Block Store (EBS), Amazon S3, Amazon Elastic File System (EFS) and the RDS and DynamoDB database options.

When you are running applications on EC2 instances, you will need somewhere to store data. Depending on the EC2 instance type you are running it will most likely come with instance level storage just like a hard drive in a laptop. This block level storage is known as instance store volumes.

You can read and write to instance store volumes for the life of the EC2 instance. However, you need to be aware that when you stop or terminate an EC2 instance, the data on its instance store volumes is lost. So for example, if you have an EC2 instance that is terminated during a scale-in event, all the data in the instance’s store volumes will be lost.

When a new instance is deployed, a fresh set of instance storage is created on the underlying hardware hosting your new EC2 instance, so you should never store data you wish to keep in instance storage volumes.

To retain data beyond the lifecycle of any single EC2 instance you can use a service called Amazon EBS. You use this service to create virtual hard drives called EBS volumes that you can attach to your EC2 instances. These volumes aren’t tied to the underlying hardware hosting your EC2 instance, so as your compute instances come and go, the data in the EBS volumes persists.

Because your EBS volumes are created on a physical hard drive, it is important that you back up your EBS volumes. This is done by scheduling snapshots of your volumes. These incremental backups are created on separate hardware, so should the hard drive hosting your EBS volume fail (which isn’t unheard of) then you have the data secured in a snapshot which is ready to restore.
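The idea of an incremental backup can be sketched in a few lines of Python. This is a toy in-memory model, not how AWS implements snapshots: the `take_snapshot` and `restore` functions and the four-block "volume" are hypothetical names made up for illustration. It only shows that after the first full copy, each later snapshot needs to hold just the blocks that changed.

```python
def take_snapshot(volume, previous_state):
    """Return only the blocks that differ from the previous state (toy model)."""
    return {
        index: block
        for index, block in enumerate(volume)
        if previous_state is None
        or index >= len(previous_state)
        or previous_state[index] != block
    }


def restore(snapshots):
    """Rebuild a volume by layering snapshots, oldest first."""
    blocks = {}
    for snapshot in snapshots:
        blocks.update(snapshot)
    return [blocks[index] for index in sorted(blocks)]


volume = ["a", "b", "c", "d"]            # hypothetical volume made of four blocks
snap1 = take_snapshot(volume, None)      # first snapshot copies every block
previous = list(volume)
volume[2] = "C"                          # a single block changes
snap2 = take_snapshot(volume, previous)  # second snapshot holds only that block
restored = restore([snap1, snap2])
```

Here the second snapshot contains one block rather than four, yet layering both snapshots reproduces the current volume.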

Amazon S3 is an object storage service that allows you to store documents, images, video and most other file types in a number of different storage options.

Objects are stored in buckets that are similar to folders used on most operating systems.

You can set policies on your buckets to control access, and choose a storage class for your objects, ranging from instant access to cheaper levels of archival storage that take longer to retrieve data from.

You can set object lifecycle properties that automatically move objects to archival storage based on your policies.

S3 is practically unlimited: you can store objects at any scale, with individual objects limited to 5TB in size.

You can enable versioning on individual buckets, so if objects are overwritten you can access previous versions of the file.
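As a rough mental model of versioning, the sketch below keeps every write to a key instead of replacing the previous one. It is a toy in-memory class, not the S3 API: `VersionedBucket`, its methods and the integer version numbers are all assumptions made for illustration (real S3 version IDs are opaque strings).

```python
class VersionedBucket:
    """Toy model of a bucket that keeps every version of each key."""

    def __init__(self):
        self._versions = {}

    def put(self, key, data):
        """Store a new version and return its 1-based version number."""
        history = self._versions.setdefault(key, [])
        history.append(data)
        return len(history)

    def get(self, key, version=None):
        """Return the latest version, or a specific earlier one."""
        history = self._versions[key]
        if version is None:
            return history[-1]
        return history[version - 1]


bucket = VersionedBucket()
bucket.put("report.txt", b"draft")
bucket.put("report.txt", b"final")            # overwrites, but the draft is kept
latest = bucket.get("report.txt")
first = bucket.get("report.txt", version=1)   # the overwritten draft
```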

Amazon S3 Standard

This tier is designed for 99.999999999% durability over a 12 month period, meaning that the chance of an object remaining intact rather than being lost is incredibly high. Durability is separate from availability, which describes how often you can actually reach the data. Behind the scenes AWS stores data across at least three physical locations, and the tier is designed to sustain the loss of data in two locations without losing your objects.
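To put the eleven nines in perspective, the durability figure can be turned into an expected loss rate. The sketch below is simple arithmetic on the stated percentage; the figure of 10 million stored objects is a hypothetical chosen for illustration, and exact fractions are used to avoid floating point rounding.

```python
from fractions import Fraction

# 99.999999999% durability expressed as an exact fraction
durability = Fraction(99_999_999_999, 100_000_000_000)
annual_loss_probability = 1 - durability   # chance of losing a given object in a year

objects_stored = 10_000_000                # hypothetical number of objects
expected_losses_per_year = objects_stored * annual_loss_probability
years_per_expected_loss = 1 / expected_losses_per_year

print(expected_losses_per_year)  # 1/10000
print(years_per_expected_loss)   # 10000
```

In other words, under this durability figure you would expect to lose on average one of those 10 million objects every 10,000 years.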

Data on the S3 standard tier is immediately available so can be used for frequently accessed files and data objects.

S3 is fast enough to be used to host static HTML websites. This capability is built into S3 and by simply ticking a box on the bucket settings you can turn the files in a bucket into a website.

S3 Standard-Infrequent Access - S3 Standard-IA

This storage tier is designed for longer term storage of objects that are not accessed frequently, but can be accessed quickly when needed. This tier is ideal for storing backups. The chances are you may never need to access these files, but when you need them, you need them in a hurry.

S3 Glacier

Glacier is designed for archival storage of objects you don’t need immediate access to. These might be governance or financial records that you need to keep for several years in case you are subjected to an audit. Glacier is significantly cheaper than standard storage, with the compromise being that it can take several hours to retrieve data from this cold storage.

You can create vaults within Glacier with lock policies to protect important data, and you can apply a WORM (write once, read many) policy to prevent changes.

You can manually move data to Glacier, or you can set bucket lifecycle policies to move data from Standard storage to Glacier after specific time periods.

There is also an option to move data from S3 Glacier to S3 Glacier Deep Archive which is significantly cheaper again, but can take a long time to retrieve data from.
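A lifecycle policy of this kind is essentially a list of age thresholds and target storage classes. The sketch below builds a rule in the general shape used by S3 lifecycle configurations (for example, the dictionary passed as `LifecycleConfiguration` to boto3's `put_bucket_lifecycle_configuration`), but check the current AWS documentation before relying on it. The rule ID, prefix and day counts are hypothetical, and `storage_class_for_age` is an illustrative helper that only evaluates the rule locally rather than calling AWS.

```python
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-records",        # hypothetical rule name
            "Filter": {"Prefix": "records/"},   # hypothetical key prefix
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
                {"Days": 365, "StorageClass": "DEEP_ARCHIVE"},
            ],
        }
    ]
}


def storage_class_for_age(age_days, rule):
    """Return the storage class an object of this age would be in under the rule."""
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
for age in (10, 45, 120, 400):
    print(age, storage_class_for_age(age, rule))
```

With these example thresholds, an object would sit in Standard for its first month, move to Standard-IA, then to Glacier after 90 days and to Glacier Deep Archive after a year.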

Both S3 and EBS allow you to store files and data, so why would you choose one over the other?

S3 is object storage, so when you update an object the entire file is uploaded to S3 again. It is also web enabled, with each object having its own URL and access control, making it ideal for storing application assets. Because S3 is replicated behind the scenes, redundancy is built in and the storage costs are significantly cheaper than the same data stored in EBS.

S3 is also serverless, so no EC2 instance is required. If you are storing objects that are viewed in their entirety, like an image file or are making minimal changes to objects, S3 is the best option.

EBS is block storage. Files are broken into small blocks so that when a change is made, only the blocks that change need to be updated, not the entire file.

Let’s say you have a video editing application and the video files you work with are extremely large, say 100GB. EBS would be a better choice for storage. As you edit the video only the changed blocks need to be written back to the original video file. If you used S3 then the entire 100GB would need to be uploaded as you made changes.
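The difference in data written can be estimated with some quick arithmetic. The sketch below is a simplified model under stated assumptions: the 16 KiB block size and the 50 MiB edit are hypothetical, the edit is assumed to be contiguous and block-aligned, and the 100GB file is treated as 100 GiB for round numbers.

```python
import math

BLOCK_SIZE = 16 * 1024  # hypothetical block size in bytes


def bytes_written_block_storage(changed_bytes, block_size=BLOCK_SIZE):
    """Block storage rewrites only the blocks that the change touches."""
    return math.ceil(changed_bytes / block_size) * block_size


def bytes_written_object_storage(object_size, changed_bytes):
    """Object storage re-uploads the whole object for any change."""
    return object_size if changed_bytes > 0 else 0


video_size = 100 * 1024**3   # the 100GB video, taken as 100 GiB
edit_size = 50 * 1024**2     # hypothetical 50 MiB edit

block_bytes = bytes_written_block_storage(edit_size)
object_bytes = bytes_written_object_storage(video_size, edit_size)
ratio = object_bytes // block_bytes

print(block_bytes, object_bytes, ratio)
```

Under these assumptions the object store transfers 2,048 times more data than the block store for the same edit.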

If you are making frequent changes to files with constant read/write/update activity, or working with frequently changing large files, then EBS is probably the best option.

EBS requires you to provision EC2 instances in the same Availability Zone as the volume in order to connect. An EBS volume also won’t scale automatically, so once it is full you need to resolve the issue manually.

You can use EBS to store files, databases and host applications.

EFS is a fully managed file system that you can connect to multiple EC2 instances. You can read and write simultaneously to EFS which manages files via the underlying Linux file system.

Unlike EBS, EFS is a regional resource, so you can access the file system from EC2 instances in any AZ in the same region.

EFS as the name suggests is elastic, so will scale in or out as your storage needs dictate.

On-premises servers can also access EFS directly using AWS Direct Connect.

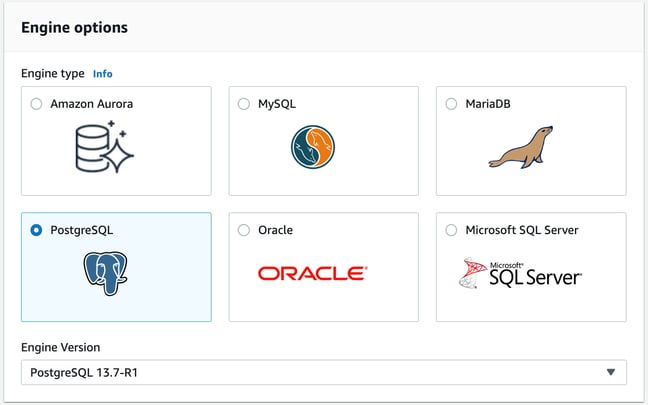

As you would expect, on top of simple object and file storage, AWS has plenty of relational database options. As well as supporting most of the popular RDBMS systems like MySQL, PostgreSQL, Oracle and Microsoft SQL Server, AWS also has managed database services on offer.

Most traditional on-premises databases can be migrated to the cloud and run inside EC2 instances, where you control the specifications of the instance hosting your RDBMS.

In this scenario you manage the operating system, patching and updates to the software running in the EC2 instance.

Another option is to use Amazon RDS, which is a managed RDBMS service from AWS.

RDS supports all the major database engines but comes with major benefits like:

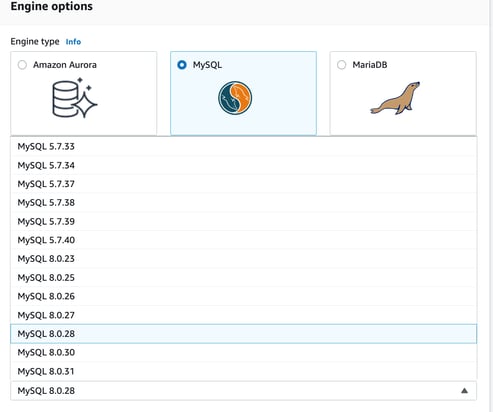

Currently you can select from Amazon Aurora, MySQL, MariaDB, PostgreSQL, Oracle and Microsoft SQL Server.

Then within each database type, you can select from dozens of different versions, which can be useful if you are migrating and want to match your on-premises database version exactly.

Aurora is probably the most managed database offering from AWS. It is compatible with MySQL and PostgreSQL, and AWS claims it is priced at 1/10th the cost of commercial databases.

Aurora databases are replicated across several physical locations so at any one time you have 6 copies of your database in existence.

You can also configure up to 15 read replicas of your database, so you can spread the read workload across them.

In terms of data security, Aurora continuously backs up your data to Amazon S3 with time codes so you can perform a point-in-time restore.

Amazon DynamoDB is a serverless, non-relational (NoSQL) database. Instead of creating tables with a rigid schema, populating those tables and using SQL to query and return results, with DynamoDB you create tables with attributes and store data.

AWS manages the underlying storage, scaling and replication, with DynamoDB data held in multiple Availability Zones and mirrored across multiple drives.

The database is massively scalable and is designed to respond within single-digit milliseconds, making it ideal for high traffic applications where performance is critical. All the performance and scaling operations are taken care of by AWS, so all you have to worry about is your data.

The schema on DynamoDB is flexible. You can add and remove attributes of an existing table at any time and not all items in a table need to have the same attributes.

Certain attributes within the tables are designated as keys, and it is the keys that you use to perform queries and return results. This data access method does not use SQL or its associated complex structured queries, and it can be quicker since all the queried keys are in the same table.
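Key-based access with a flexible schema can be pictured as a dictionary lookup. The sketch below is a toy in-memory model, not the DynamoDB API: `KeyValueTable`, its methods and the `user_id` key are hypothetical names used only to show items with different attributes being stored and fetched by key.

```python
class KeyValueTable:
    """Toy model of a table whose items are looked up by a designated key."""

    def __init__(self, key_name):
        self.key_name = key_name
        self._items = {}

    def put_item(self, item):
        """Store an item under the value of its key attribute."""
        self._items[item[self.key_name]] = dict(item)

    def get_item(self, key_value):
        """Fetch an item directly by key, or None if it does not exist."""
        return self._items.get(key_value)


users = KeyValueTable(key_name="user_id")
users.put_item({"user_id": "u1", "name": "Ana"})
# A later item can carry an attribute the first one lacks.
users.put_item({"user_id": "u2", "name": "Ben", "loyalty_tier": "gold"})

print(users.get_item("u1"))
print(users.get_item("u2"))
```

Each lookup goes straight to the item for that key, with no joins across tables and no requirement that every item shares the same attributes.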

Redshift is AWS’ data warehouse solution. Designed to hold massive amounts of structured and semi-structured data ingested from multiple sources, Redshift allows you to perform business intelligence operations on historical data to answer business questions.

Redshift is designed to handle petabytes of data from a wide variety of sources, from sales or inventory transactional information right through to industrial IoT sensors and data lakes.

AWS DMS allows you to migrate existing databases to AWS hosted databases. The source and destination database types can be the same or different with the migration process taking care of the schema creation and code changes required to run the new database type.

The source database, whether it is running on on-premises servers or in the cloud, remains fully operational during the migration process, which greatly reduces downtime.

The process of migrating to the same type of database is reasonably straightforward. If you were migrating an on-premises MySQL source database to an Amazon RDS for MySQL target, the schema structures, data types and database code would be the same, thus simplifying the process.

When the source and target databases are of different types, the migration becomes a two step process. The schema, data types and database code are most likely different when moving to a different database, so you first use the AWS Schema Conversion Tool to convert the existing schema to the required format, at which point you can use DMS to start migrating the data.

AWS DMS is also regularly used for development and test database migrations, database consolidation and continuous database replication.

So that's a run through the topics covered in the Storage and Databases module of AWS Cloud Practitioner Essentials, and I hope you gained a little insight into the variety of data storage options available from AWS.

