Cloud Computing Glossary of Terms

A comprehensive glossary of cloud computing terms / terminology drawn from the AWS, Azure and GCP cloud computing platforms.

AWS Cloud Practitioner Essentials is a course designed to help you understand the essentials of AWS and prepare for the AWS Certified Cloud Practitioner exam.

The course covers an introduction, compute in the cloud, AWS global infrastructure, networking, storage and databases, security, monitoring and analytics, pricing and support, migration and innovation, the cloud journey and, finally, certification.

The introduction explains the core concept of cloud computing: you pay only for what you need, when you need it. The ability to scale in times of peak demand, such as a sales event, is a major advantage of the cloud over on-premises servers. As demand rises you can spin up new resources to service it, and as demand subsides you can scale back.

AWS defines “cloud” as the on-demand delivery of IT resources over the internet with pay-as-you-go (PAYG) pricing.

Using AWS, you can request additional resources without prior arrangement. You simply provision what you need, as you need it. If you require terabytes of additional storage or a few hundred new virtual servers, AWS can typically service that demand within minutes, which is not possible if you run IT infrastructure in-house or in your own data center.

With PAYG pricing, not only can you provision resources as you need them, you can also turn off resources that are not in use. For instance, developer environments may not be needed overnight or at weekends, so they can be stopped when idle to save money.
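To put rough numbers on this, here is a minimal Python sketch comparing an always-on developer instance with one that runs only during office hours. The hourly rate and the 08:00 to 18:00, Monday to Friday schedule are hypothetical assumptions, not AWS prices. The sketch covers compute charges only; storage such as attached EBS volumes is still billed while an instance is stopped.

```python
# Hypothetical figures for illustration only; these are not real AWS prices.
HOURLY_RATE = 0.10        # assumed On-Demand price in $/hour for one instance
HOURS_PER_WEEK = 24 * 7   # 168 hours if the instance is never stopped


def weekly_compute_cost(hours_running: float, hourly_rate: float = HOURLY_RATE) -> float:
    """Pay-as-you-go: compute is billed only for the hours the instance runs."""
    return hours_running * hourly_rate


# Assumed schedule: the dev environment runs 08:00-18:00, Monday to Friday.
office_hours = 10 * 5  # 50 hours per week

always_on = weekly_compute_cost(HOURS_PER_WEEK)
scheduled = weekly_compute_cost(office_hours)
saving_pct = 100 * (always_on - scheduled) / always_on

print(f"always on:         ${always_on:.2f} per week")
print(f"office hours only: ${scheduled:.2f} per week")
print(f"saving:            {saving_pct:.0f}%")
```

With these assumed numbers the scheduled environment costs about 70% less, simply because it runs for 50 of the 168 hours in a week.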

With cloud computing you trade upfront hardware, software and maintenance expenses for variable, consumption-based expenses. This means you avoid large investments in data centre infrastructure and servers before you know exactly how they will be utilised.

With the need for your own data centre removed, you avoid the overheads associated with running and maintaining hardware, buildings and security so you can focus on your applications and customers.

You no longer have to guess your usage and capacity; you can throw away your crystal ball and simply scale your compute instances in and out as needed.

Because AWS infrastructure benefits from economies of scale, PAYG prices can be lower than what you would pay to run equivalent hardware yourself, and you can experiment with new solutions on a wide variety of compute instances. In your own data centre you might wait weeks or months for new resources, and even once they are online, if the equipment doesn’t perform as expected or doesn’t match demand, you could be stuck with unsuitable servers and more delays.

With the cloud, you can spin up new compute instances from a wide selection of CPU, memory and storage options to run experiments in minutes. You might be testing application performance or response times, and if things don’t go according to plan, you can terminate the instance and select another one to test.

As well as the different types of compute instances, you can also specify different global regions to deploy into. Provisioning servers closer to your users can help reduce latency and provide a faster user experience.

The next module in AWS Cloud Practitioner Essentials revolves around compute, mainly Amazon Elastic Compute Cloud (EC2), Elastic Load Balancing and Amazon EC2 Auto Scaling. The module also touches on the messaging services Amazon SNS and Amazon SQS and the differences between them.

Having covered the benefits of the cloud, we already know that AWS has built the data centers, rammed them full of hardware and has compute capacity waiting to be used. You don’t need to purchase hardware up front and install servers in a rented or owned data center.

All the hard work has already been done, so you can simply provision the EC2 instances you need. AWS virtualisation technology provides a virtual server ready for you to start working with in a matter of minutes. With On-Demand pricing you only pay for EC2 instances while they are running, and you are not locked into a contract.

You can stop instances that are not being used, or terminate them at any time. Terminating an instance removes it and returns the physical server capacity to the pool of available resources in the AWS data center for other businesses to use.
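The difference between stopping and terminating can be summed up as a small state machine. The Python sketch below is a hypothetical model, not the EC2 API: it borrows EC2's `running`, `stopped` and `terminated` state names but leaves out the intermediate states, and it treats compute as billed only while an instance is running. Attached EBS storage continues to be billed while an instance is stopped.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    STOPPED = "stopped"
    TERMINATED = "terminated"


# Simplified transitions; EC2's intermediate states (pending, stopping,
# shutting-down) are left out to keep the sketch short.
TRANSITIONS = {
    (State.RUNNING, "stop"): State.STOPPED,
    (State.STOPPED, "start"): State.RUNNING,
    (State.RUNNING, "terminate"): State.TERMINATED,
    (State.STOPPED, "terminate"): State.TERMINATED,
}


class Instance:
    """Hypothetical model of an instance's lifecycle, not the EC2 API."""

    def __init__(self) -> None:
        self.state = State.RUNNING

    def apply(self, action: str) -> None:
        next_state = TRANSITIONS.get((self.state, action))
        if next_state is None:
            # e.g. a terminated instance can never be started again
            raise ValueError(f"cannot {action} an instance that is {self.state.value}")
        self.state = next_state

    @property
    def billed_for_compute(self) -> bool:
        # Compute charges accrue only while running; a stopped instance
        # still keeps its EBS storage (and the storage charges).
        return self.state is State.RUNNING


dev_box = Instance()
dev_box.apply("stop")       # overnight: no compute charges
dev_box.apply("start")      # next morning: running again
dev_box.apply("terminate")  # finished with it: capacity returns to the pool
```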

A hypervisor controls the virtual machines running on the physical data center hardware. When you request a virtual machine, EC2 places it on a physical server with the appropriate memory and CPU for the instance type you have selected, and the hypervisor on that server runs it.

While the physical servers are multi-tenant and share resources between EC2 instances, individual instances are isolated and not aware of each other, providing the security you would expect from advanced virtualization.

When you provision a new EC2 instance, you don’t need to worry about which server it is running on, or what else is running on that server. You do, however, control what runs on the instance. You can choose from different versions of Linux or Windows and from a selection of preconfigured images (Amazon Machine Images, or AMIs) running web apps, business apps, databases and third-party software, or start with just the operating system installed, ready for you to deploy your own applications.

Once an instance is running, you are not locked into the specifications you initially selected. If you find you need more memory or CPU, you can stop the instance and change its instance type (vertical scaling). The same is true in reverse: if you need less memory or CPU, you can move to a smaller instance type and save a bit of money.

In terms of network access, your instances run inside an Amazon Virtual Private Cloud (VPC), and you decide how its networking is configured. You may want public access or strictly private traffic; you decide how the VPC is accessed.

There are a large number of EC2 instance types with different specifications and capabilities to match lots of different compute workloads.

Instance types are grouped into “instance families”:

General Purpose EC2 Instances - provide a balance of compute, memory and network resources, making them suitable for application servers, gaming servers, enterprise application back ends and small to medium database servers. Use them where no single resource dominates the workload.

Compute Optimized EC2 Instances - suited to compute-intensive applications that benefit from high-performance processors, such as web, application and gaming servers and high-volume batch processing.

Memory Optimized EC2 Instances - ideal for processing large data sets in memory and other memory intensive workloads.

Accelerated Computing EC2 Instances - use hardware accelerators, or coprocessors, to perform some functions, such as floating-point number calculations and graphics processing, more efficiently than software running on the CPU. Typical uses include game streaming and other graphics-intensive applications.

Storage Optimized EC2 Instances - designed for workloads that need high, sequential read/write access to large data sets on local storage, such as distributed file systems, data warehouses and high-frequency online transaction processing (OLTP). Where a workload needs a high number of input/output operations per second (IOPS) from local storage, storage optimized instance types are the right choice.
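The family descriptions above boil down to a rough decision rule. The Python sketch below encodes it; the `Workload` flags and the order in which they are checked are our own simplifying assumptions, and real sizing decisions should be based on measured CPU, memory and I/O usage.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a workload's dominant needs."""
    needs_accelerator: bool = False       # GPU or other coprocessor work
    high_local_storage_io: bool = False   # heavy sequential read/write on local disks
    large_in_memory_data: bool = False    # big data sets processed in RAM
    cpu_bound: bool = False               # limited mainly by processor speed


def suggest_family(w: Workload) -> str:
    """Map a workload to one of the five EC2 instance families."""
    # The most specialised needs are checked first; anything without a
    # dominant requirement falls back to General Purpose.
    if w.needs_accelerator:
        return "Accelerated Computing"
    if w.high_local_storage_io:
        return "Storage Optimized"
    if w.large_in_memory_data:
        return "Memory Optimized"
    if w.cpu_bound:
        return "Compute Optimized"
    return "General Purpose"


print(suggest_family(Workload(cpu_bound=True)))             # batch processing
print(suggest_family(Workload(large_in_memory_data=True)))  # in-memory analytics
print(suggest_family(Workload()))                           # typical app server
```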

You can choose from several pricing options when it comes to EC2 Instances.

On-Demand Pricing - You pay only for the time your instance runs. Depending on the instance type and operating system, usage is billed per hour or per second. This is ideal for trying out instance types with no upfront payment, long-term commitment or communication with AWS required. On-Demand usage also shows you your actual usage patterns, which you can then use to evaluate whether a Savings Plan would be cheaper.

Savings Plan Pricing - You can get much lower prices (up to 72% off On-Demand rates) in exchange for committing to a consistent amount of usage, measured in $/hour, for a 1-year or 3-year term.

Reserved Instances - Offer deep discounts in exchange for a 1-year or 3-year commitment to EC2 instances, paid All Upfront, Partial Upfront or No Upfront.

Spot Instances - Let you use unused EC2 capacity at up to a 90% discount. The catch is that AWS can reclaim your instance with a 2-minute warning when it needs the capacity back, so Spot Instances suit workloads that can tolerate interruptions, such as batch jobs that can pause and resume.

Dedicated Hosts - Physical servers with EC2 instance capacity fully dedicated to your use, rather than shared with other tenants. They are the most expensive of the EC2 options.
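The trade-off between paying On-Demand and committing to a Savings Plan is easiest to see with some arithmetic. The Python sketch below uses a hypothetical $0.10/hour On-Demand rate and treats the "up to" discounts quoted above as if they applied in full; both are assumptions, not real prices. The key point it models is that a Savings Plan commitment is charged for every hour of the term, whereas On-Demand and Spot are charged only while the instance runs.

```python
HOURS_PER_MONTH = 8760 / 12    # 730 hours in an average month
ON_DEMAND_RATE = 0.10          # hypothetical $/hour, not a real AWS price
SAVINGS_PLAN_DISCOUNT = 0.72   # best case "up to 72%" from the text
SPOT_DISCOUNT = 0.90           # best case "up to 90%" from the text


def on_demand_cost(hours_running: float) -> float:
    """Billed only for the hours the instance actually runs."""
    return hours_running * ON_DEMAND_RATE


def spot_cost(hours_running: float) -> float:
    """Also billed only while running, but the instance can be reclaimed."""
    return hours_running * ON_DEMAND_RATE * (1 - SPOT_DISCOUNT)


def savings_plan_cost() -> float:
    """The $/hour commitment is charged for every hour of the term, used or not."""
    hourly_commitment = ON_DEMAND_RATE * (1 - SAVINGS_PLAN_DISCOUNT)
    return HOURS_PER_MONTH * hourly_commitment


# Running hours at which the commitment costs the same as paying On-Demand
break_even_hours = savings_plan_cost() / ON_DEMAND_RATE

for hours in (100, 400, HOURS_PER_MONTH):
    print(f"{hours:6.0f} h  on-demand ${on_demand_cost(hours):6.2f}  "
          f"savings plan ${savings_plan_cost():6.2f}  spot ${spot_cost(hours):6.2f}")
print(f"break-even at {break_even_hours:.1f} h "
      f"({break_even_hours / HOURS_PER_MONTH:.0%} of the month)")
```

Under these assumptions the break-even point is (1 - discount) of the month: an instance that runs more than about 28% of the time is cheaper on the commitment, while one that runs less is cheaper On-Demand. Actual Savings Plan discounts vary by instance family, Region, term and payment option, so 72% is a ceiling rather than a typical rate.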

The scalability and elasticity of EC2 compute is what sets it apart from the traditional on-premises hardware approach. Being able to scale out to meet increased demand and then scale back in when demand drops off is the ‘elastic’ nature of scaling, and is what gives Elastic Compute Cloud its name.

If you are like most businesses, your customer activity will vary month to month and season to season. You might, for instance, have a massive surge in sales in the lead-up to Christmas or other marketing calendar events during the year. The problem with traditional on-premises IT solutions is knowing what hardware you require to service that peak demand.

If you buy enough hardware to cover that peak, you may find yourself over-provisioned for the non-peak times, leaving you with expensive equipment running at a fraction of its potential.

When an application is failing to keep up with demand, there are two main ways to improve its performance:

Scale up - this is where you increase the power of your EC2 instance by moving it to an instance type with more CPU or memory.

Scale out - this is where you add more EC2 instances in response to higher demand. As load increases, more instances are added to process requests, then as those additional instances fall idle due to decreased demand, they can be terminated.

There are two approaches to auto scaling: predictive and dynamic.

Dynamic scaling responds to changing demand based on triggers such as CPU utilization over a period of time.

Predictive scaling automatically schedules the right number of EC2 instances to be running based on predicted demand.
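To make dynamic scaling concrete, the Python sketch below applies a simple proportional rule in the spirit of target tracking: choose enough instances to bring average CPU utilization back to a target, then keep the result within the group's minimum and maximum size. The function name and the exact formula are assumptions for illustration; AWS's own scaling algorithms are more involved.

```python
import math


def dynamic_desired_capacity(current_instances: int, avg_cpu_percent: float,
                             target_cpu_percent: float, min_size: int,
                             max_size: int) -> int:
    """Hypothetical proportional rule: size the group so average CPU lands near the target."""
    if current_instances < 1 or target_cpu_percent <= 0:
        raise ValueError("need at least one instance and a positive target")
    # Total load is roughly instances * utilization; spread it so each
    # instance runs at about the target utilization.
    needed = math.ceil(current_instances * avg_cpu_percent / target_cpu_percent)
    # Never go below the group's minimum or above its maximum.
    return max(min_size, min(max_size, needed))


# 2 instances running hot at 90% CPU with a 50% target: scale out
print(dynamic_desired_capacity(2, 90, 50, min_size=1, max_size=6))
# 4 instances idling at 20% CPU with a 50% target: scale in
print(dynamic_desired_capacity(4, 20, 50, min_size=1, max_size=6))
```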

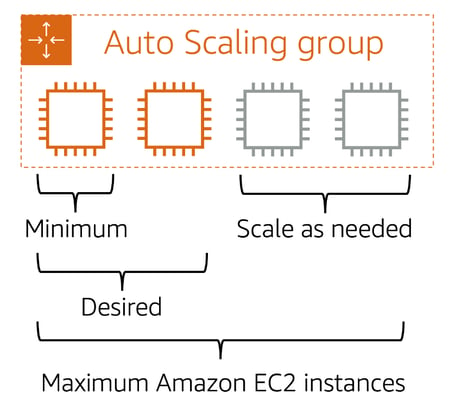

When you create an Auto Scaling group, you set the minimum number of EC2 instances that should run, followed by the desired number of instances. While your application or website may need only a single instance to function, you might prefer to run two for performance purposes.

Then you set the maximum number of instances. This is the upper limit on how many instances the group will automatically launch in response to increased demand.
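The three settings form a simple invariant: minimum ≤ desired ≤ maximum, and the group launches or terminates instances until the number running matches the desired capacity (replacing any instance that fails along the way). The Python sketch below models that. The `CapacitySettings` class is hypothetical, although the real Auto Scaling API exposes equivalent `MinSize`, `DesiredCapacity` and `MaxSize` parameters.

```python
from dataclasses import dataclass


@dataclass
class CapacitySettings:
    """Hypothetical model of an Auto Scaling group's capacity settings."""
    minimum: int
    desired: int
    maximum: int

    def __post_init__(self) -> None:
        if not 0 <= self.minimum <= self.desired <= self.maximum:
            raise ValueError("expected 0 <= minimum <= desired <= maximum")

    def reconcile(self, running: int) -> int:
        """Instances to launch (positive) or terminate (negative) to reach desired."""
        return self.desired - running


# One instance is enough to function, two are preferred, never more than four.
group = CapacitySettings(minimum=1, desired=2, maximum=4)
print(group.reconcile(running=1))  # an instance failed: launch a replacement
print(group.reconcile(running=3))  # one too many: terminate one
```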

When multiple instances perform the same tasks, you need a way to distribute traffic between them. Elastic Load Balancing (ELB) is an AWS service that integrates with EC2 to spread incoming traffic across all running instances.

As your auto scaling group adds instances, the ELB will automatically start sending a portion of traffic to the new instances.

When it comes time to scale in, the Auto Scaling group (ASG) notifies the ELB that an instance is terminating. The ELB stops sending new requests to that instance and lets in-flight requests finish so it can terminate cleanly, then continues sending traffic evenly across the remaining instances.
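The register, route and drain cycle can be sketched in a few lines of Python. This is a hypothetical in-process model using simple round-robin routing; it is not how Elastic Load Balancing is implemented or configured, and the class, method names and instance IDs are our own.

```python
class RoundRobinBalancer:
    """Hypothetical load balancer model; not the Elastic Load Balancing API."""

    def __init__(self) -> None:
        self._targets: list[str] = []
        self._draining: set[str] = set()
        self._counter = 0

    def register(self, target: str) -> None:
        # e.g. the Auto Scaling group launched a new instance
        self._targets.append(target)

    def start_draining(self, target: str) -> None:
        # Scale-in: stop sending new requests; in-flight ones may finish.
        self._draining.add(target)

    def deregister(self, target: str) -> None:
        # Draining finished, so the instance can terminate.
        self._targets.remove(target)
        self._draining.discard(target)

    def route(self) -> str:
        """Pick the next target in turn, skipping any that are draining."""
        active = [t for t in self._targets if t not in self._draining]
        if not active:
            raise RuntimeError("no targets available")
        target = active[self._counter % len(active)]
        self._counter += 1
        return target


lb = RoundRobinBalancer()
lb.register("i-a")
lb.register("i-b")
first = [lb.route() for _ in range(4)]   # alternates between i-a and i-b
lb.register("i-c")                       # scale out
lb.start_draining("i-a")                 # scale in begins
second = [lb.route() for _ in range(2)]  # only i-b and i-c receive traffic
lb.deregister("i-a")
```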

While most people think of ELBs as distributing external traffic, you can also use them to control traffic between tiers of your application.

For instance, if your e-commerce front end is separated from the back-end order processing, both tiers could use auto scaling, with an ELB in between automatically spreading incoming orders across the currently active back-end instances.

Another key component of AWS compute is the utilization of queues and messaging. Amazon Simple Queue Service (SQS) is a highly available and robust service that allows software components to communicate.

In a tightly coupled version of the e-commerce example, when the front end needs to tell the back end that an order must be processed, it has to wait for a back-end process to be available to accept the order payload. If the back-end processes go offline or are over capacity, the front end waits or even times out, causing a less than ideal user experience.

SQS solves this problem by decoupling the two tiers: the front end sends order payloads to a queue, and back-end processes pick up orders from the queue as they become available.
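A minimal Python sketch of this decoupling follows, using an in-memory queue in place of SQS. The class, its methods and the order payloads are hypothetical. It mirrors the SQS pattern in which a consumer receives a message, processes it and then deletes it, but it omits visibility timeouts, retention periods and everything else that makes SQS durable and distributed.

```python
from collections import deque
from typing import Any, Optional


class InMemoryQueue:
    """Hypothetical stand-in for a message queue; not the SQS API."""

    def __init__(self) -> None:
        self._waiting: deque = deque()
        self._in_flight: dict[int, Any] = {}
        self._next_id = 0

    def send(self, body: Any) -> None:
        # The producer returns immediately, whether or not a consumer is online.
        self._waiting.append((self._next_id, body))
        self._next_id += 1

    def receive(self) -> Optional[tuple[int, Any]]:
        # A received message is hidden from other consumers until deleted.
        # (SQS makes it visible again after a visibility timeout; this
        # sketch leaves that out.)
        if not self._waiting:
            return None
        msg_id, body = self._waiting.popleft()
        self._in_flight[msg_id] = body
        return msg_id, body

    def delete(self, msg_id: int) -> None:
        # The consumer deletes the message once it has been processed.
        del self._in_flight[msg_id]

    def in_flight_count(self) -> int:
        return len(self._in_flight)


orders = InMemoryQueue()

# Front end: the back end is busy, but orders are accepted straight away.
for order_id in ("A100", "A101", "A102"):
    orders.send({"order_id": order_id})

# Back end: picks up orders whenever it has capacity.
processed = []
while (message := orders.receive()) is not None:
    msg_id, body = message
    processed.append(body["order_id"])
    orders.delete(msg_id)
```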

Amazon Simple Notification Service (SNS) is another way for software components and users to communicate. It uses a publish/subscribe (pub/sub) model, in which information or payloads are published to topics.

Every subscriber to a topic is notified when something is published to it. SNS topics support many subscriber types, including HTTP/HTTPS endpoints, email addresses, SMS, Amazon SQS queues and AWS Lambda functions.
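The fan-out behaviour of pub/sub can be shown with a tiny in-process topic. The Python sketch below is hypothetical (it is not the SNS API), and the three subscribers simply stand in for an email endpoint, a queue and a function.

```python
from typing import Callable


class Topic:
    """Hypothetical in-process pub/sub topic; not the SNS API."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._subscribers: list[Callable[[str], None]] = []

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, message: str) -> int:
        """Deliver the message to every subscriber; return how many received it."""
        for handler in self._subscribers:
            handler(message)
        return len(self._subscribers)


# Stand-ins for different subscriber types (email, a queue, a function).
email_outbox: list[str] = []
fulfilment_queue: list[str] = []
audit_log: list[str] = []

order_events = Topic("order-placed")
order_events.subscribe(lambda m: email_outbox.append(f"To customer: {m}"))
order_events.subscribe(fulfilment_queue.append)
order_events.subscribe(lambda m: audit_log.append(m.upper()))

delivered = order_events.publish("order A100 confirmed")
```

Unlike a queue, where a message is typically handled by one consumer, every subscriber receives its own copy of each published message. Real SNS delivers asynchronously over the network and retries failed deliveries, whereas this sketch calls subscribers synchronously.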

While EC2 is great for everything from a basic web server to complex high performance clusters, you still need to be involved with the software deployment, operating system and application patching, setting up auto scaling and designing your architecture to be highly available.

AWS offers several serverless options that remove the responsibility for designing and managing the underlying infrastructure, so you can just concentrate on your application code. In a serverless environment you don’t need to access or even see the underlying instances, because AWS fully manages them for you.


AWS Lambda is one of the serverless options. You create functions that host your application code and set triggers that run that code as needed. AWS takes care of the resources and scales them according to how often your Lambda functions are triggered.
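For a sense of what a Lambda function looks like, here is a minimal Python handler. Python functions receive an `event` (the trigger's payload) and a `context` object; the handler name is configurable, and `lambda_handler` is the conventional default. The `order_id` field and the response shape (the format API Gateway proxy integrations expect) are assumptions for this example.

```python
import json


def lambda_handler(event, context):
    """Runs each time the function is triggered; Lambda supplies event and context."""
    # The event's shape depends on the trigger. Here we assume a
    # hypothetical payload such as {"order_id": "A100"}.
    order_id = event.get("order_id")
    if order_id is None:
        return {"statusCode": 400,
                "body": json.dumps({"error": "order_id is required"})}
    # Real application logic would go here.
    return {"statusCode": 200,
            "body": json.dumps({"message": f"processed order {order_id}"})}


if __name__ == "__main__":
    # Local smoke test: call the handler directly, no AWS account needed.
    print(lambda_handler({"order_id": "A100"}, None))
```

Because the handler is an ordinary function, it can be tested locally like this; once deployed, AWS decides how many copies to run in parallel based on how often it is triggered.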

AWS provides container orchestration services that let you run Docker containers on top of EC2. Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) both allow you to set up and manage clusters of containers.

If you have no desire or need to access the EC2 instances and operating systems hosting your containers, you can use AWS Fargate to host your containers using either ECS or EKS tooling.

AWS defines cloud computing as the on-demand delivery of IT resources over the internet with pay-as-you-go pricing. This means that you can request IT resources such as compute instances, networking, data storage, analytics or other types of resources, and they are made available to you on demand. You don't pay for the resources upfront; instead, you provision resources as needed and pay at the end of the month for the resources you have used.

AWS offers services in many categories that you use together to build your application solutions. We covered EC2, which lets you dynamically spin up and spin down virtual servers called EC2 instances. When you launch an EC2 instance, you choose an instance type from one of several instance families, and the family determines the kind of hardware resources the instance is optimized for.

The instance families are general purpose, compute optimized, memory optimized, accelerated computing and storage optimized.

You can scale your EC2 instances vertically (up) by resizing the instance, or horizontally (out) by launching new instances and adding them to the pool. You can automate horizontal scaling using Amazon EC2 Auto Scaling.

Once you've scaled your EC2 instances out horizontally, you need something to distribute the incoming traffic evenly across those instances. This is where the AWS Elastic Load Balancer comes into play.

EC2 instances have several pricing models. On-Demand is the most flexible and has no contract. Spot pricing lets you use unused capacity at a discounted rate. Reserved Instances and Savings Plans let you commit to a certain level of usage with AWS in return for a discounted rate, and Savings Plans also apply to AWS Lambda and AWS Fargate as well as EC2 instances.

We also covered messaging services. Amazon Simple Queue Service (SQS) allows you to decouple system components; messages remain in the queue until a consumer processes and deletes them, or until the queue's retention period expires. Amazon Simple Notification Service (SNS) is used to send messages such as emails, text messages, push notifications or even HTTP requests on a pub/sub basis. Once a message is published to a topic, it is sent to all of the topic's subscribers.

We also covered the fact that AWS offers compute services beyond virtual servers like EC2. There are container services such as Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS), both of which are container orchestration tools.

You can use these tools with EC2 instances, but if you don't want to manage that, you can use AWS Fargate, which allows you to run your containers on top of a serverless compute platform.

Then there is AWS Lambda, which lets you upload your code and configure it to run based on triggers. You are only charged for the requests your function receives and the time your code actually runs. No containers, no virtual machines, just code and configuration.

Hopefully that sums up the compute section of AWS Cloud Practitioner Essentials and provides a high-level overview of the compute options available in AWS.

Once you are building applications and deploying compute and storage instances on AWS, you can use hava.io to automatically generate diagrams of what you have running.

Whether you are just starting out and have a single network you would like to diagram for free, or you have thousands of VPCs under management across hundreds of AWS accounts, Hava can auto generate diagrams of your infrastructure and keep those diagrams up to date automatically, no manual intervention or drag and drop required.

You can open a trial account at hava.io and start diagramming straight away.

In the next post in the AWS Cloud Practitioner Essentials series we will cover AWS Global Infrastructure and Reliability.
