Base Image source: AWS Marketplace https://aws.amazon.com/marketplace/solutions/devops#

The evolution of Operational Service Models.

One of the core concepts in DevOps is the analogy of Cattle vs Pets, which describes the service model and the relative importance of individual resources in your cloud environments.

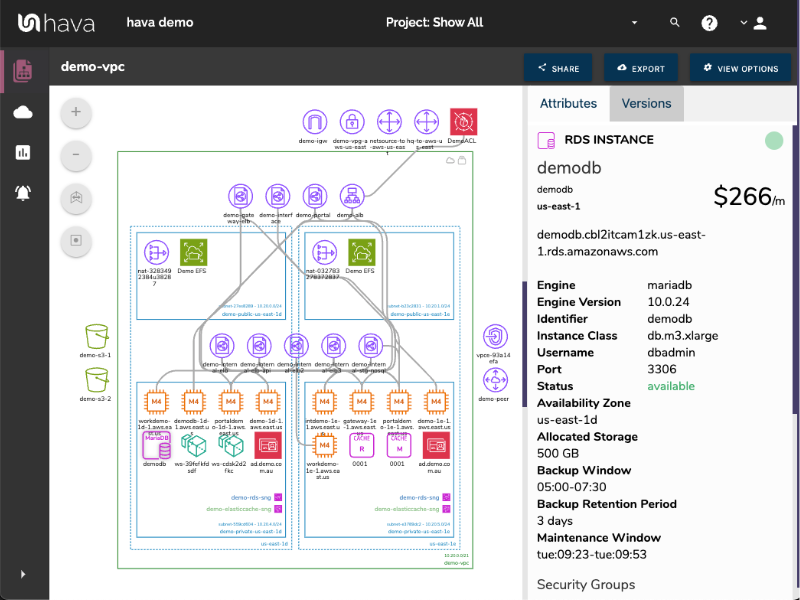

You can easily identify your pets using Hava.

Pets

The "Pets" service model describes carefully tended servers that are lovingly nurtured and given names like they were faithful family pets. Zeus, Apollo, Athena et al are well cared for.

When they start to fail or struggle, you carefully nurse them back to health, scale them up and make them shiny and new once in a while.

Whether Zeus is a mainframe, a solitary service, a database server or a load balancer, when it goes missing, everybody notices.

Cattle

Cattle, on the other hand, aren't afforded quite the same loving attention as Zeus or Apollo. This service model typically tags servers svr01, svr02, svr03 and so on, much the same way ear tags are applied to cattle. They are all configured pretty much identically, so when one gets sick, you simply replace it with another one without a second thought.

A typical use of cattle configurations would be web server arrays, search clusters, NoSQL clusters, datastores and big-data clusters.
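To make the idea concrete, here is a minimal Python sketch of a herd in which a sick server is simply swapped for a fresh one. The `Server` and `Herd` classes and the health flag are hypothetical names made up for illustration; they are not part of any real tool.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Server:
    tag: str              # ear-tag style identifier, e.g. "svr01"
    healthy: bool = True


class Herd:
    """A fleet of identically configured, interchangeable servers (illustrative only)."""

    def __init__(self, size: int) -> None:
        self._next_id = count(1)
        self.servers = [self._provision() for _ in range(size)]

    def _provision(self) -> Server:
        # Every server is built the same way; only the tag differs.
        return Server(tag=f"svr{next(self._next_id):02d}")

    def replace_unhealthy(self) -> list[str]:
        """Swap each sick server for a fresh one and return the retired tags."""
        retired = [s.tag for s in self.servers if not s.healthy]
        self.servers = [s if s.healthy else self._provision() for s in self.servers]
        return retired


herd = Herd(size=3)
herd.servers[1].healthy = False       # svr02 gets sick
retired = herd.replace_unhealthy()    # nobody nurses it back to health
tags = [s.tag for s in herd.servers]
print(retired, tags)                  # ['svr02'] ['svr01', 'svr04', 'svr03']
```

Nothing about svr02 is preserved. Its replacement gets the next tag in the sequence and the same configuration, which is exactly what separates cattle from a pet like Zeus.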

As the adoption of bare-metal racked cattle servers either on-prem or in data centers became popular, so did the need for automation to control them. Tools like Puppet and Chef enabled Ops to configure fleets of "cattle" using automation.

Cloud IaaS - the dawn of a new age.

With the advent of Infrastructure as a Service (IaaS), developers and operations teams now had access to entirely virtualized IT infrastructure. In 2006, the entire network, storage, compute, memory and CPU infrastructure ecosystem was available through Amazon Web Services (AWS), followed a few years later by Microsoft Azure in 2010 and Google Cloud Platform (GCP) in 2011.

Same cattle, on someone else's (server) farm.

The need to automate and orchestrate the configuration and deployment of cloud solutions gave rise to tools like SaltStack, Ansible and Terraform. These tools gave Developers and Operations (DevOps) the ability to programmatically deploy the infrastructure required to support their applications - a process now known as "Infrastructure as Code".
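The core idea behind Infrastructure as Code can be sketched in a few lines of Python: describe the infrastructure you want as data, compare it with what actually exists, and work out the changes. This is only a conceptual sketch, not how Terraform or any other tool is implemented, and the resource names and the `plan` function are hypothetical.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and current state and list what would have to change."""
    return {
        "create": sorted(desired.keys() - current.keys()),
        "destroy": sorted(current.keys() - desired.keys()),
        "update": sorted(
            name for name in desired.keys() & current.keys()
            if desired[name] != current[name]
        ),
    }


# Desired state is written down as code and kept in version control.
desired = {
    "web-1": {"type": "vm", "size": "small"},
    "web-2": {"type": "vm", "size": "small"},
    "db-1": {"type": "database", "size": "large"},
}
# Current state is whatever actually exists in the cloud account right now.
current = {
    "web-1": {"type": "vm", "size": "small"},
    "db-1": {"type": "database", "size": "medium"},
    "old-cache": {"type": "vm", "size": "small"},
}

changes = plan(desired, current)
print(changes)
# {'create': ['web-2'], 'destroy': ['old-cache'], 'update': ['db-1']}
```

Because the desired state is just a file, it can be reviewed, versioned and re-applied like any other code.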

While the virtualization of the environments required to support applications was now fully automated, the next evolution was seen as the ability to containerise applications together with their supporting infrastructure, making them portable irrespective of the underlying hardware or cloud technology. Early on, solutions like Linux Containers, OpenVZ and Docker provided the ability to segregate applications into their own isolated environments without having to virtualize the underlying hardware.

Containers enjoyed an explosion of popularity and Docker evolved into its own ecosystem. A raft of technologies aimed at allocating resources to containers and deploying them across clusters of cattle servers became available, including Kubernetes, Apache Mesos, Swarm and Nomad.
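As a rough illustration of what "allocating resources to containers" means, the Python sketch below places each container on the first server with enough free memory. It is a deliberately naive first-fit strategy with hypothetical container names and sizes; real orchestrators weigh many more factors and none of them is claimed to work this way.

```python
def schedule(containers: dict[str, int], nodes: dict[str, int]) -> dict[str, str]:
    """Place each container on the first node with enough free memory (MiB)."""
    free = dict(nodes)                 # remaining capacity per node
    placement = {}
    for name, needed in containers.items():
        for node, available in free.items():
            if available >= needed:
                placement[name] = node
                free[node] = available - needed
                break
        else:
            # No node in the cluster could fit this container.
            raise RuntimeError(f"no node has {needed} MiB free for {name}")
    return placement


containers = {"api": 512, "worker": 768, "cache": 256}   # memory requests in MiB
nodes = {"svr01": 1024, "svr02": 1024}                   # cattle servers

placement = schedule(containers, nodes)
print(placement)
# {'api': 'svr01', 'worker': 'svr02', 'cache': 'svr01'}
```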

What happened to the Pets?

They are still alive and well. There will always be the need for run-time change and configuration of application servers managed in house or in the cloud. Terraform, Ansible and Chef still look like the most popular platforms chosen by DevOps to manage and deploy infrastructure as code.

Using Containers to Deploy Applications.

For teams adopting a containerized infrastructure methodology, Kubernetes now appears to be the default vehicle to deploy applications on the big three cloud platforms.

Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS) and Amazon Elastic Kubernetes Service (EKS) are all managed Kubernetes services.

Config Management (CM) vs Container Orchestration (CO)

Although they are not mutually exclusive, heavy reliance on one generally reduces the reliance on the other.

Ansible is one of the most popular CM tools and basic knowledge of it would be of benefit; however, the advanced skills worth investing in are those required to master CO. Docker is more or less the container standard at the moment, and the usual mechanism for orchestration is Kubernetes.

It will probably take 6 months to be fully comfortable with the basics of Kubernetes, but it is worth it: its market dominance over tools like Nomad shows in how often firms state a preference for Kubernetes when hiring DevOps engineers.

Terraform is also a must-have skill. Being able to drive tools that deploy infrastructure as code is definitely a major bonus, especially as tools like Terraform feature in almost every modern Ops stack. You don't need to be a master, but it is a skill that almost every mid-level DevOps engineer is assumed to have.

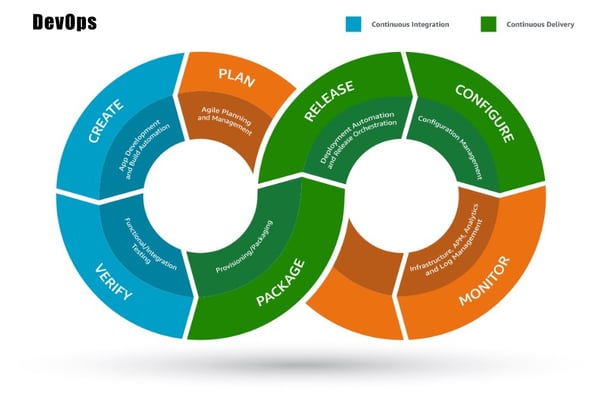

CI / CD Continuous Integration / Continuous Delivery

With the automated deployment of applications across clusters of containers, independent of the underlying hardware, developers have the opportunity to deliver code changes more frequently and reliably. CI/CD is an Agile Methodology best practice that enables dev teams to focus on business requirements, security and code quality because the deployment is automated. The CI systems establish a consistent and automated way to build, package and test code. Having that integration process in place allows developers to commit code changes more frequently.

After the code is committed, CD takes over and distributes it to multiple environments such as dev, test and production via automation.

The CI/CD process stores all the environment specific parameters that must be packaged with each delivery and automates the calls to web servers, databases or other servers that may require a restart after application deployment.
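A minimal Python sketch of that delivery step might look like the following. The environment names come from the text above, while the settings, host names, build ID and the `package` and `deliver` functions are hypothetical and only there to show the shape of the process.

```python
ENVIRONMENTS = ["dev", "test", "production"]

# Environment-specific parameters are kept by the pipeline, not in the code.
SETTINGS = {
    "dev": {"db_host": "db.dev.internal", "replicas": 1},
    "test": {"db_host": "db.test.internal", "replicas": 2},
    "production": {"db_host": "db.prod.internal", "replicas": 6},
}


def package(build_id: str, environment: str) -> dict:
    """Bundle one build with the parameters for one environment."""
    return {"build": build_id, "environment": environment, **SETTINGS[environment]}


def deliver(build_id: str, checks_pass) -> list[dict]:
    """Promote a build through each environment until a check fails."""
    delivered = []
    for environment in ENVIRONMENTS:
        release = package(build_id, environment)
        if not checks_pass(release):
            break                      # stop promoting; later environments are untouched
        delivered.append(release)
    return delivered


releases = deliver("build-42", checks_pass=lambda release: True)
print([r["environment"] for r in releases])   # ['dev', 'test', 'production']
```

The same build is promoted unchanged from one environment to the next; only the packaged parameters differ, which is what makes the deliveries repeatable.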

Jenkins is probably the most widely used tool in the CI/CD space but has its challenges. It would be worth getting to grips with it, even if you eventually head down a different path with GitLab, Travis or CircleCI, amongst others.

There is a practically unlimited number of combinations of tools to build, configure and deploy code and applications in a "DevOps stack".

A good foundation if you are starting out would be the ever-popular combination of:

- Terraform (to build the infrastructure)

- Ansible (to configure)

- Jenkins (to deploy apps)

A greater understanding of AWS and CloudFormation templating is also highly valuable in DevOps, as is a firm understanding of Git, Docker, Kubernetes, Jira/Confluence, Nexus/Maven, Bash, JSON/YAML, PowerShell... and the list goes on.

Of course, once you've established and deployed your Pets or Cattle, the next step is to accurately document your cloud topology. Decent, accurate diagrams make it easier to explain what is going on, both internally within your DevOps team and externally to management, and they help you meet the ever-demanding requirements of your security team.

A good AWS architecture diagram that's auto-generated (hands-free) will also satisfy the most pedantic PCI compliance auditor. Likewise, you can use Hava to auto-generate GCP and Microsoft Azure diagrams, as well as diagrams of standalone Kubernetes clusters.

That's what we do: we bring you the benefits of generated cloud documentation.

You are welcome to take hava.io for a free 14-day trial now and start tracking down your pets, no microchip required. Or you can bookmark this site for when you have something built.